First, consider the fact that you can play these games anytime and anywhere you want. The comfort factor is what entices people to go online and start playing. As long as you have your computer, an internet connection, and your credit or debit card with you, you are all set to play. That means you can do this in the comfort of your own home, in your hotel room while on business trips, and even during lunch break at your place of work. You don’t have to be anxious about people disturbing you, getting into fights, or dealing with loud music. It is like having your own private VIP gaming room at home or wherever you are in the world.

Of course, as with the max bet, the jackpot displayed at the bottom of the screen meets a high roller’s expectations. The progressive jackpot starts from about $75,000 and has gone as high as $2,200,000. The standard jackpot is approximately $727,000, which is a pretty good win.

This option is great for those who would like the name of the track marked on their music. This way you can avoid conflicts by including the name of your directories.

If you do not visit a casino very often, you will notice that the slot machines get a new make-over the next time you show up at one. The machines come in all denominations and sizes too. For example, the new machine might be the loudest one on the casino floor, ringing out as though a jackpot has been hit every time the smallest of wins occurs.

When you play games on video slot machines in casinos, most of the employees there will offer you some drinks. It would be nice to have a glass of a good drink while playing. It could surely add to the fun you wish to experience. But you ought to know that the main reason most casinos offer you drinks is to distract you during the game. This is how casinos make their profits. So that you can keep full concentration while playing, never take a drink. It is good to have a clear mind so that you can focus on making profits.

ASTON 138 spins: – This has been developed by Cryptologic and allows a person $189 spins. This slot game is inspired by King Kong and takes the various foods he loves as its theme. If a person wins the jackpot on the maximum spin, he or she gets $200,000. If the player pairs the banana icon with the mighty monkey icon, they can win a prize. This is not open to players in the USA.

Sure, you could use it as a cool-looking bank, but why not have some fun and get your money back the hard way? Some may think it is rigged to keep it, but if you keep doing it over time, you will have an interesting way to save money and have extra for particular needs.

…

Everything You Should Know About Bingo Side Games

The slot machines are also the most numerous machines in any Vegas casino. A typical casino usually has at least a dozen slot machines or even a slot machine lounge. Even convenience stores sometimes have their own slots for quick bets. Though people don’t usually come to a casino just to play the slots, they use the machines while waiting for a vacant spot at the poker table or until their favorite casino game starts a new round. Statistics show that a night of casino gambling does not end without a visit to the slot machines for most casino customers.

At these casinos they will either let you enter a free mode or give you bonus spins. In the free mode they will give you some free casino credits, which have no cash value. What this allows you to do is play the various games that are on the site. Once you have played an online slot machine that you like the most, you will be comfortable with it when you start to play for money.

That is correct, you did read that right. Now you can play online slots and other casino games anytime you want, right on your personal computer. No longer do you have to wait until your vacation rolls around, or figure out some lame excuse to tell the boss so that you can get a week off to head over to your number one brick and mortar casino.

All we can say is that we know a great buy when we see one. For the past four years we’ve been looking for the best slot machines, like the Fire Drift Skill Stop Slot Machine, that come from international casinos. The reason we chose these over others was the fact that most had been used for only a month or two before being shipped off to warehouses to distribute however they chose. This meant we were basically getting a brand new slot machine for an extremely large discount.

It is one of the oldest casino games played by casino lovers. There is no doubt that this game is quite popular among both beginners and experienced players. Different scopes and options for betting make the game a very interesting and exciting casino game. The player has various betting options. They can bet by numbers, like even or odd, by colors like black or red, and more.

In a nutshell, the R4 / R4i is just a card that enables you to run multimedia files or game files on your DS. No editing of the system files is required; it is strictly a ‘soft mod’ that does not affect your NDS in any way. You just insert the R4i / R4 card into the game slot, and the R4 / R4i software will run.

Do not use your prize to play. To avoid this, have your prize paid by check. Casinos require cash for playing. With a check, you can stay away from the temptation of using your prize up.

…

Bonus Slots – Receiving Targeted For Your Dollars

You must have basic knowledge of the basic elements of the personal computer. But once you master it, you can put together a computer that suits you the most. For instance, you need to know how to attach the processor (the core of any machine) to the motherboard, which slot on your motherboard is for the graphics (video) card, and how to add the RAM (Random Access Memory) to the motherboard.

To find these fruit machine emulators on the internet, you simply need to do a search for them. There are many sites that will let you play them for free. Then there are fruit emulator CDs that you can purchase, which have many variations of the fruit machine emulators. This way you learn the different ones and at the same time don’t become bored. Although that never seems to be a problem with the serious fruit machine players.

When someone sets out to build their own gaming room, there is a temptation to go with what has already been done: to make the place look like a mini Caesars Palace or Monte Carlo. Instead of giving in to the norm, you should try to be original. It is fine if you love those locations and want to imitate what they have already done, but be careful not to throw out your own wants and preferences in a futile attempt at being a copycat. In the process, you are going to get a lot of suggestions from the people in your circle. It is important not to let your own taste and class get drowned out in the noise.

Homebrew and ROMs can be added to the card with a simple drag and drop. One just has to make sure there are respective folders into which the files can be dropped.

There are a few things you need to know before actually starting the game. It is better for you to read more about the game so that you can play it correctly. There is a common misconception among the players. They think that past performance will have some impact on the game. Some also think that future events can be predicted from the past results. It is not true. It is a game of sheer chance. The luck factor is quite important in this game. The best part of the game is that it is easy to learn and understand. But you need to practice it again and again. You can play free roulette online.

How to play online slots is easy. It is only the technology behind slots that is hard. Online slots generally offer a larger payout. Learning the payoff table will help explain how much you can possibly win. The payout table will give you an idea of what you are looking for in order to win. Lines across the reels are among the common winning combinations with online slots. Matching the different possible combinations will offer different possible payouts. It is not nearly as hard to understand as it sounds. A row of three cherries, for instance, will offer a set payout, and that row may be up and down or across. The same row of 7’s might offer a higher payout or an extra spin.

Roulette is one of the most popular games available in the casino. The game may appear rather complicated, but it is actually pretty easy to learn and offers some very large payouts. This is an exciting game for both the recreational player and the serious gambler. It is best that you learn how to bet in roulette and play free games until you are confident that you can effectively place your wagers in a real money game. Roulette can be a prosperous game to play once you know how to bet effectively. Roulette is available in download form, flash version and live dealer casinos.

…

How To Backup Nintendo Wii Gaming Console Games

You should know when to stop playing, especially when you have won a lot of games. It is even wise to stop playing when you have already won a large amount of money in only one game. When this has happened, stop playing for the day and come back some other time. Always remember that your aim is to retain your profits. Playing continuously might lead to a great loss in the long run.

In the past, serious players have spent tons of money on these machines, just trying to figure out how they work, let alone what they could win. It was no good practicing or trying to learn on an online machine because, although they looked the same, they were not identical. That has now changed with the arrival of the fruit machine emulator.

Both the M3 DS and the R4 DS Slot 1 solution are made by the same people, or at the very least, the same factory. What this means for gamers and homebrew enthusiasts is that they can get their hands on the R4 DS and know that they’re getting the exact same product they’d be getting if they bought the M3 DS Simply at another shop.

What is missing is the capability to stream from your PC’s hard drive or from a hard drive that is attached to the network. Also, the current “2” series of Roku streaming players support only the 2.4GHz (802.11b/g/n) Wi-Fi spectrum. The 5GHz Wi-Fi version would be better in highly populated urban areas that have an overcrowded Wi-Fi spectrum, to prevent the occasional stalling and freeze-ups.

The PlayStation 3 version of the `Xross Media Bar` model comes with nine categories of options. Among them are photo, music, video, game, and the built-in PlayStation Network. It includes the ability to manage and explore photos with or without a musical slide show, and to store several master and secondary user profiles. You can even play your favourite music and copy audio CD tracks to an attached hard drive. You will also be able to play movies and video files from the hard disk drive, an optional USB mass storage device or flash card, or an optical disc. The gaming device is compatible with a USB keyboard and mouse.

Now, here are secrets on how to win slot tournaments, whether online or land based. The first thing is to know how slot machines work. Slots are actually operated by an electronic random number generator, or RNG. This RNG changes and determines the result of the game or combination a thousand times each second.

There are no definite ways to ensure winning in video slots. Many people like to play slot games because of the fun and excitement they bring. Video slots are also good when you want to earn profits while playing and win loads of money. We all want to win. That is the main goal of playing, aside from getting a real form of entertainment.

While we’re on the topic of online casinos, let’s go over a few things here. First of all, before you ever open an account at an online casino, make sure they’re legitimate. You can do this simply by doing a search online with the casino name. Believe me, if there’s anything negative that people have to say, you’ll find it there. People love to tell others about their bad experiences.

…

A Real Way To Conquer Online Slots

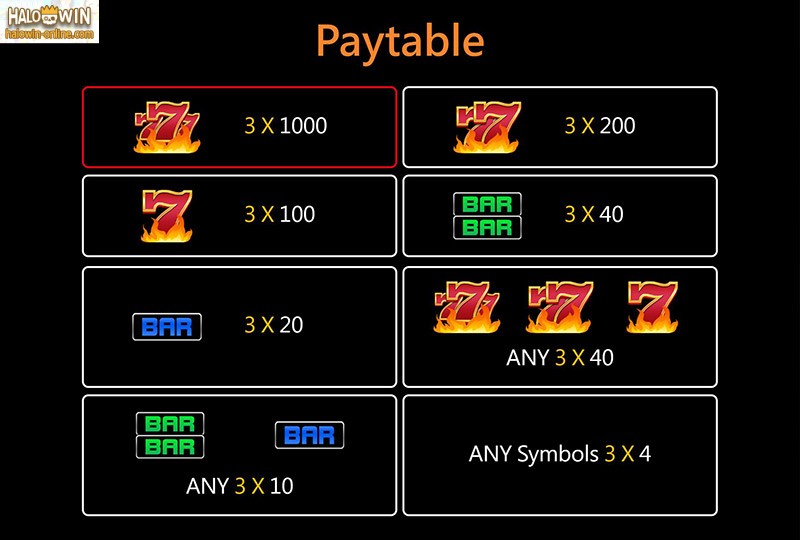

Here are a few tips on how to calculate the cost per spin. When you are in the casino, you can use your mobile phone to do the calculations. Even the most basic mobile phone these days is equipped with a calculator tool. In calculating the cost per spin, you need to multiply the game cost, the maximum lines, and the number of coins bet. For example, if a game costs you $0.05 with 9 maximum lines, multiply $0.05 by 9 maximum lines by 1 coin bet. That means it will cost you $0.45 per spin if you are playing 9 maximum lines on a nickel machine with a one-coin minimum bet. This is one strategy which you can use to win at casino slot machines.
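The multiplication described above can be sketched in a few lines of Python. This is only an illustration: the function name `cost_per_spin` and its parameter names are made up for this example, and `Decimal` is used simply so that the cents come out exact.

```python
from decimal import Decimal


def cost_per_spin(coin_value: str, lines: int, coins_per_line: int) -> Decimal:
    """Cost of one spin = game cost (coin value) x lines played x coins bet per line."""
    return Decimal(coin_value) * lines * coins_per_line


# Nickel machine, 9 maximum lines, 1 coin bet per line.
print(cost_per_spin("0.05", 9, 1))  # 0.45
```

Changing any one of the three inputs shows how quickly the price of a spin grows, which is the point of doing the sum before you sit down.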

Then watch as the various screens come up. The title screen will show the name of the program and sometimes the maker. The game screen will show you what program it uses. You need to look at certain aspects of that screen to see how to play that particular machine. Also, this screen will usually tell you how high the Cherry and Bell Bonus go. You can usually tell whether the cherries go to 12, 9, 6 or 3, and also whether the bells go to 7, 3 or 6. The best ones to beat are those where the cherries go to 3 and the bells go to 2. These will take less time to play and less money to beat.

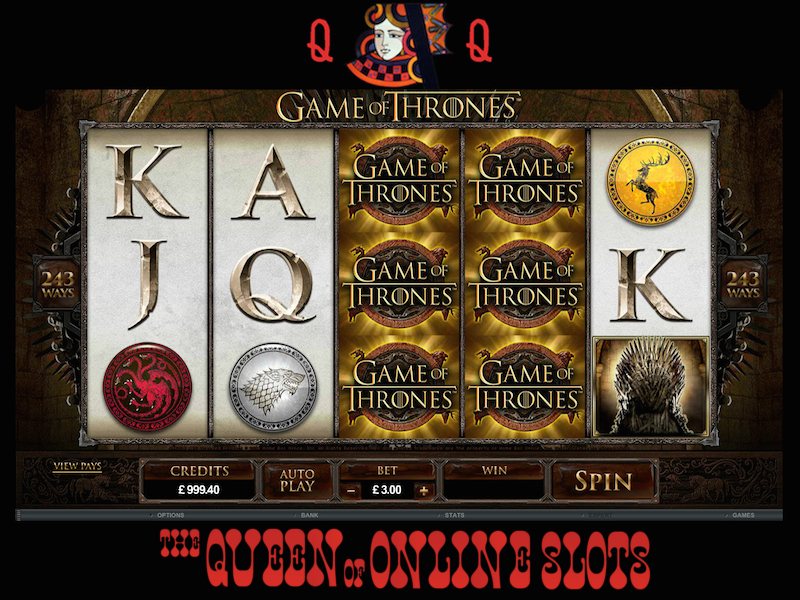

Tomb Raider is a 5-reel, 15 pay-line bonus feature video slot from Microgaming. It comes with wilds, scatters, a Tomb Bonus Game, 10 free spins, 35 winning combinations, and a top jackpot of 7,500 coins. Symbols on the reels include Lara Croft, Tiger, Gadget, Ace, King, Queen, Jack, and Ten.

Fact: Not at all. There are more losing combos than winning ones. Also, the top winning combination comes up only rarely. The smaller the payout, the more often those winning combos appear. And the larger the payout, the less often that combination is going to appear.

It is always a good idea to join the slots club at any casino that you go to. This is one way that you can lessen the amount of money that you lose, because you will be able to get things around the casino for free.

The Black King Pulsar Skill Stop Machine is one of the many slot machines that are widely loved by people of different ages. This slot machine was also refurbished in the factory. It was thoroughly tested in the factory, after which it was sent on to stores for sale.

The Mu Mu World Skill Stop Slot Machine can give you a great gambling experience without the hustle and bustle of the casino. You can even let your children play on this antique slot machine without the fear of turning them into gamblers. With this machine you will also not be worried about your children falling into the bad company that may be encountered in a casino environment.

…

Online Gambling Vs Traditional Gambling

If you are planning a vacation, then you must certainly try Las Vegas and experience what the city has to offer. There can be a lot of temptation when going there, so it is important that you know exactly where to go in order to avoid the risk of losing all your money.

The reason non-progressive slots are better than the progressive ones is that the non-progressive has a smaller jackpot amount. Casinos around the world give a really high jackpot amount in progressive machines to make them more attractive to a lot of players. But the odds of winning on these slots are very low. This is very common in casinos and slot gaming halls all over the world.

There are a number of different manufacturers. The most popular ones are Scalextric, Carrera, AFX, Life Like, Revell and SCX. Sets for these makes are available from hobby stores, large department stores and online shopping sites including eBay and Amazon. Scalextric, Carrera and SCX have the widest range of cars, including analog and digital sets.

This video slot has 5 reels and 9 pay-lines, with a high-society theme. Choose from savory high tea, delicious cheesecake, or freshly-baked blueberry pie. Two or more Wild Horse symbols on the pay-line create winning combinations. Two symbols pay out $12, three symbols pay out $200, four symbols pay out $1,000, and all five Wild Horse symbols pay out $5,000.

Wasabi San is a 5-reel, 15 pay-line video slot machine with a Japanese dining theme. Wasabi San is an exquisitely delicious world of “Sue Shi,” California hand rolls, sake, tuna makis, and salmon roes. Two or more Sushi Chef symbols on the pay-line create winning combinations. Two symbols pay out $5, three symbols pay out $200, four symbols pay out $2,000, and all five Sushi Chef symbols pay out $7,500.

37. In horse racing or any form of sports gambling, you need to win about 52.4% of the bets you make in order to break even. This is because a commission is charged by the house on every bet.
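A short Python sketch shows where a figure like 52.4% can come from. It assumes the common arrangement in which the bettor risks 11 units to win 10, with the extra unit being the house commission; the text above does not state the exact commission, so treat the 11-to-10 terms and the function name `break_even_win_rate` as assumptions made for illustration.

```python
def break_even_win_rate(risk: float, win: float) -> float:
    """Share of bets that must win so that winnings equal losses.

    Break-even when p * win == (1 - p) * risk, so p = risk / (risk + win).
    """
    return risk / (risk + win)


# Assumed terms: risk 11 units to win 10 (the extra unit is the commission).
rate = break_even_win_rate(11, 10)
print(f"{rate:.1%}")  # 52.4%
```

With no commission (risk 10 to win 10) the same function returns 50%, so the gap between the two numbers is the house's cut.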

47. Legend has it that a man by the name of Francois Blanc made a bargain with Satan in order to discover the supposed ‘secrets’ of the roulette wheel. The basis of this legend is that when you add up all of the numbers on the wheel, you end up with the number 666, a number that has always represented the devil.

Do not use your prize to play. To avoid this, have your prize paid by check. Casinos require cash for playing. With a check, you can stay away from the temptation of using your prize up.

…

Download Free Wii Games Today

A slot machine called “Operator Bell”, similar to “Liberty Bell” in design, was created in 1907 by Herbert Mills. He was a Chicago manufacturer. This slot machine enjoyed greater success. Slot machines then became quite common throughout the United States.

The Lines per Spin button is used to determine the number of lines you want to bet on for each game. The Bet Max button bets the maximum number of coins and starts the game. The Cash Collect button is used to receive your cash from the slot machine. The Help button is used to display tips for playing the game.

The online slot games have a variety of pictures, from tigers to apples, bananas and cherries. When you get all three, you win. Many use RTG (Real Time Gaming), which is one of the top software developers for slots. These include the download, a flash client and mobile versions, so you can take your game anywhere you want to go. There are also progressive slots, where you can actually win a lifetime jackpot and only have to pay out a few dollars. As with all gambling, your chances of winning the jackpot are like winning a lottery: not so good, but it is fun. They say to play as many coins as you need to win the jackpot; the risk is higher, and so is the payout.

If you do not visit a casino very often, you will notice that the slot machines get a new make-over the next time you show up at one. The machines come in all denominations and sizes too. For example, the new machine could be the loudest one on the casino floor, ringing out as though a jackpot has been hit each time the smallest of wins occurs.

The creation of the random number generator (RNG) in 1984 by Inge Telnaes critically changed the development of the machines. A random number generator transforms a weak physical phenomenon into digital values, i.e. numbers. The device uses a programmed algorithm, constantly sorting through the numbers. When the player presses the button, the device selects the random number required for the game.
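The idea of a button press picking whatever random numbers the generator produces at that moment can be sketched in Python. This is only an illustration under stated assumptions: it uses Python's software pseudo-random generator rather than a physical source, and the symbol list, the three-reel layout and the `spin` function are hypothetical, not taken from any real machine.

```python
import random

# Hypothetical reel symbols, chosen only for this illustration.
SYMBOLS = ["cherry", "bell", "bar", "seven"]


def spin(rng: random.Random, reels: int = 3) -> list[str]:
    """Simulate one button press: draw one random stop per reel."""
    return [SYMBOLS[rng.randrange(len(SYMBOLS))] for _ in range(reels)]


rng = random.Random()  # seeded from the operating system by default
result = spin(rng)
print(result)
```

Each call to `spin` draws fresh numbers and does not look at earlier results, which is why a previous win or loss tells you nothing about the next spin.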

First, find the games you want to play, using an online search engine like Google. Enter a relevant search phrase, like “online casino slot game”, or “download online casino game”. This will give you a big list of websites you can check.

If a person happens to be the winner of the big jackpot, the screen bursts into illumination, and this continues for the next five to eight minutes. The most interesting thing is that the user is bound to feel that they are in a real international casino and everybody is exulting over the fact that they have hit a big jackpot win.

…

Playing Quite Video Slots Currently Available

To play slots, there are no strategies to memorize; but playing casino slots intelligently does require certain skills. Here are the basics of how to increase the likelihood of hitting a large jackpot.

Tip #3: Bet the maximum money to win the biggest wins. This I couldn’t stress enough in general mechanical slot play. Why bet one coin when you could bet three or more and win much more? Since we are dealing with mechanical slots and not multi-line video slots, we can all afford to bet only three coins. Players will find that the wins will come more frequently and the overall line wins will be much bigger. I advise this same tip for progressive slots like Megabucks and Wheel of Fortune. Ever bet one coin on the wheel and end up getting the bonus wheel symbol on the third reel, only to grind your teeth when it happens? It has happened to the best of us, but it doesn’t ever need to happen again.

If you are lucky enough to win on a video slot machine, leave that machine. Do not think that machine is the ‘lucky machine’ for you. It made you win once, but it will not let you win on the next games for sure. Remember that slots are regulated by a random number generator, and this is electrically driven. In every second, it changes the combination of symbols a thousand times. And most of the time, the combinations are not in your favor. If you still have the time or remaining balance in your allotted money, then maybe you can try the other slot machines. Look for the slot machine that offers high bonuses and high payouts but requires fewer coins.

Lucky Charmer – This online slot is best known for its good bonuses. You will see a second-screen bonus feature. There are 3 musical pipes, and when you reach the bonus round, the charmer plays your choice. But to activate the bonus round, you must be able to hit the King Cobra on the 3rd pay-line.

As soon as the reels stop, it is time to check whether you have got any winning combination. Generally the winning amount is shown in sterling. If you have won something, you may click on the payout table. It is impossible to know what you will be winning, as unpredictability is the second name of the slot game. If you do not win, try playing a new game.

As its name implies, Millionaire Casino is the best casino for players who want to be treated like a millionaire. And it starts by giving you a wide selection of casino games to choose from. In every game, you can experience the feeling of “playing the real thing” with fine graphics and great sounds. Your thirst for online gambling will surely be filled at Millionaire Casino.

When playing at online casinos, you don’t have to worry about unknowingly dropping your money or chips on the ground and walking off, only to realize that you lost a lot of money. You can also feel at ease that no one will be out to take physical advantage of you when playing online. Playing from home, you will not be anyone’s easy target either. These days, women are playing more online casino games and winning some of the Internet’s top jackpots, and many female players feel more confident at home than they tend to at land casinos by themselves.

…

Arabian Nights Slots Approaches To Use Free Online Games

Of course, you may be wondering how the Lucky Stash Slot Machine actually works, which is a great reason to consider checking out a YoVille Facebook guide. Every single day you get at least one free spin when you log in to play. You may also see posts on your Facebook page from your friends. They are mini slot machines. Play them and you may win more free spins on the machine. Of course, once you run out of free spins, you can always use your reward points to take a spin on the machine. You can choose to spin using one credit, two credits, or three credits. Of course, the amount that you can win is going to depend on the amount that you bet in the first place.

Second, when you are doing that, make sure you check out their re-deposit bonus plans too. Some of these can also be quite substantial. You want to make sure you get all the perks you can, just like you would at a typical casino. Third, make sure you review their progressive slot games, since some of them can make you a millionaire in a matter of seconds.

37. In horse racing or any form of sports gambling, you need to win about 52.4% of the bets you make in order to break even. This is because a commission is charged by the house on every bet.

The machine has overwhelming sound quality. It has a spinning sound, which is hard to recognize as unreal. Therefore, it has the exact effect of a casino.

Video poker is basically a game between you and the computer. There are many video poker games available, so take advantage of online casino offers for free play. This way you can find a game that you like and develop a strategy you can use in a real money game. Video poker is available in both download and flash versions.

Casino slot machine strategy #2 – Once you have found a good paying game that is regularly paying out, raise the number of coins you bet, and in bad times with low payouts and big losses keep the game at 1 coin per pull.

That completes this month’s hot list. One thing I didn’t take into account is the progressive video slots like Wheel of Fortune, Price Is Right, and so on. The aforementioned games have terrible odds of hitting consistent wins, and the whole chase of hitting the progressives drops the statistical odds through the floor. Nor did I include any mystery progressive machines. Most of us are familiar with Fort Knox, Jackpot Party, etc., so you know what I mean.

Players must battle Doctor Octopus and are placed in difficult situations. Playing as the super-hero, you must save the lives of the innocent victims before you can move on to your next spin. Players will face all the regular criminals of the comic book, making it even more fun to play. This action hero has special powers like climbing walls, shooting out his own spider web, and sensing danger. He was bitten by a radioactive spider, and this is how he became the popular super-hero Spiderman.

…

Popular Casino Games – The Probability Of Roulette

Be sure to set reasonable goals. Suppose you’re willing to risk $200 on your favorite slot or video poker game. It would be wishful thinking to hope to turn $200 into $10,000, but you might have a realistic chance to turn $200 into $250; that’s a 25% gain in a very short time. Where else can you get 25% on your money and have fun doing it? But you must quit as soon as this goal is achieved. On the other hand, if you plan to make your $200 stake last for three hours, play a 25-cent or even a 5-cent machine. Stop at the end of the pre-set time period, regardless of whether you’re ahead or behind.
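The goal-setting rule above comes down to a small piece of arithmetic and two stopping conditions. The Python sketch below is one way to write them down; the function names `gain_percent` and `should_stop` and the idea of tracking minutes played are assumptions made for this example, not part of any casino system.

```python
def gain_percent(stake: float, target: float) -> float:
    """Percentage gain needed to grow the stake to the target."""
    return (target - stake) / stake * 100


def should_stop(balance: float, target: float,
                minutes_played: int, time_limit_minutes: int) -> bool:
    """Quit when the win goal is reached, the pre-set time is up, or the stake is gone."""
    return (balance >= target
            or minutes_played >= time_limit_minutes
            or balance <= 0)


print(gain_percent(200, 250))          # 25.0
print(should_stop(250, 250, 40, 180))  # True: goal reached
print(should_stop(180, 250, 180, 180)) # True: three hours are up
print(should_stop(180, 250, 40, 180))  # False: keep playing
```

Writing the two limits down before you start makes it harder to talk yourself into "just one more spin" once either of them is hit.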

Although there are no exact strategies that will surely nail the win in playing slots, here are some tips and strategies that will guide you in increasing your chances of winning. If you use these tips every time you play, you will be able to gain more profits in the long run.

If you are a newbie in slot machines, do not worry. Learning how to play slots does not require many instructions to remember. Basically, playing slots is only about pushing buttons and pulling handles. It can be learned in a few spins. As a new player, you need to know how to place bets so that you can maximize your spins and increase the excitement that you will experience.

These machines are three-reel slot machines. They do not have any slot machine program or software included within them. They do not include batteries either.

There are currently a number of existing mobile slots to choose from. But it is not wise to grab the first one you happen to put your hands on. There are a few things you should know so you can maximize your mobile slot experience.

Moonshine is a very popular 5-reel, 25 payline video slot that has a hillbilly theme. Moonshine is where you will encounter a gun-crazy granny, the county sheriff, and a shed full of moonshine. Moonshine accepts coins from $0.01 to $1.00, and the maximum number of coins that you can bet per spin is 125. The top jackpot is 8,000 coins.

#5: Your life can change in a moment. See #4. The only way your life can change at a game like Roulette is if you take everything you own and bet it on one spin of the roulette wheel. In slots you can be playing the way you normally play and then boom, suddenly you’ve just won $200k.

3) Incredible Spiderman – this is another one of those video slot machines that makes the most of its film tie-in. It has three features and it can also provide some seriously big wins thanks to the Marvel Hero Jackpot.

…

How To Earn Big On Slot Machine Games

Do not use your prize to play. To avoid this, have your prize paid by check. Casinos require cash for playing. With a check, you can stay away from the temptation of using your prize up.

The memory options for the laptop include 8 GB, 16 GB, and 32 GB (dual channel). Even if you just stick with the 8 GB, your laptop will be able to handle programs quickly. You’ll be able to do multiple tasks at once without any problems.

There are a few things you will want to know before actually starting the game. It is better for you to read more about the game so that you can play it correctly. There is a common misconception among the players. They think that past performance will have some impact on the game. Some also feel that future events can be predicted from the past results. It is not true. This is a game of sheer chance. The luck factor is quite important in this game. The best part of the game is that it is easy to learn and understand. But you need to practice it again and again. You can play free roulette online.

Some of the slots in casinos are quite simple to play, with one coin and one payout line. Some are more complicated and have multiple payout lines. There are also some that are very complicated and have progressive payout lines.

If you are a hard core AMD fan, do not panic: AMD has long been a strong competitor to Intel. Its Athlon 64 FX-62 CPU is the first Windows-compatible 64-bit PC processor and can handle the most demanding applications with outstanding performance. With over 100 industry accolades under its belt, what else do I need to say?

There are some fun games to play in casinos, but perhaps the most popular of them are slot machines and roulette. Both games depend heavily on chance, and both carry a house edge that cannot be beaten. Given their popularity, however, one can’t help but ask: which is the better game?

Do not trust anyone around. You may hear people saying that the best slots are in the front row or the last ones; do not listen to anyone. You may even hear that there are machines that give out a lot of cash at a certain point in the day or night. Do not listen to any of this gossip. As a player, you should listen to and trust only yourself on online slots.

Although each free slot tournament differs in its rules and prize money, the usual format at most slots sites is that you play one slot game over a period of a week. It is normal to see at least 300 players win some kind of prize in a single slots game. You can definitely be one of them if you are persistent with your efforts.

…

Gold Rally Progressive Slot Machine

Use your mouse – Use your mouse and press the button to get the reels spinning. The reels will not spin without a push in the right direction, so go ahead and push the button.

If you are short on time anyway, speed bingo may be one of those things you should try out. Some people are addicted to online bingo but find it hard to find the time to play. If that is the case, speed bingo is a great thing to get into. You can fit double the number of games into a normal slot of time, increasing your chances of winning if you are playing for the jackpot. While you may be able to monitor fewer cards at the same time, the same is true of everyone else in the game, keeping your chances of a win better than or at least equal to a traditional game of online bingo.

Being an avid sports bettor and market enthusiast, I couldn’t ignore the correlation that binary options has with gambling. In this form of trading you are given two options to choose from: up or down. Is the particular security, currency, or commodity going to move up or down in the respective time frame that you’ve chosen? Kind of like: are the Patriots going to win by 3 or not? Is the score going to be higher or lower than 43? You can see where this is going, right?

Something else to factor into your calculation is how much the perks and bonuses you’re getting back from the casino are worth. If you’re playing in a land-based casino where you’re getting free drinks while you play, then you can subtract the cost of those drinks from your hourly cost. (Or you can add the cost of those drinks to the value of the entertainment you’re receiving; it’s just a matter of perspective.) My recommendation is to drink top-shelf liquor and premium beers in order to maximize the entertainment value you’re getting. A Heineken can cost $4 a bottle in a nice restaurant. Drink two Heinekens an hour, and you’ve just lowered what it costs you to play each hour from $75 to $67.

Slots are set up to encourage players to play more coins. It is clear to see that the more coins one bets, the better the odds and the payouts are. Most machines allow you to select the value of the coin that you will play with. When the payout schedule pays at a higher rate for more coins, you are better off playing smaller denominations and maximum coins. This concept seems simple, but many jackpots have been lost by careless play.

The odds of winning the game depend on luck, and no element can influence or predict the outcome of the game. Bingo games are played for fun, as no decisions need to be made. However, there are some essential tips that give a better chance to win the game. Playing only a few cards at a time is suggested, and banging should be avoided while dabbing. A paper card with lower numbers should be selected, as this gives more chance of getting the numbers closer together. In Overall games, it is suggested that you come out early to get the first set issued. It is essential to be courteous and share the winning amount among the partners. Ideally, the chances of winning are better when you play with fewer people. Some even record their games when trying out some special games. It is easy to dab.

If you hit a wild Thor your winnings can be multiplied 6 times. This can make potential winnings reach $150,000. Then you can also click the gamble button to double or quadruple your wins.

…

Upgrade Your Computer To Improve Game Play

Players can choose to use the balls they win to keep playing, or exchange them for tokens or prizes such as pens or cigarette lighters. In Japan, cash gambling is illegal, so cash prizes are not awarded. To circumvent this, the tokens can usually be taken to a convenient exchange centre – generally located very close by, maybe in a separate room near the pachinko parlor.

Atomic Age Slots for the High Roller – $75 Spin Slots: – This is a slot game from Rival Gaming casinos and allows a person to wager a maximum of 75 coins for each spin. $1 is the largest coin denomination. This slot focuses on the 1950s era of American pop culture. This is a video slot game with state-of-the-art sounds and graphics. The wild symbol in this game is the icon of the drive-in, and the icon which lets you win the most is the atom symbol.

This new gaming device has virtually redefined the meaning of a slot machine. If you see it for the first time, you would not even think that it is a slot machine in the first place! Even its game play is different. While it is similar to the traditional slot machine in the sense that its objective is to win by matching the symbols, the Star Trek slot machine plays more like a video game.

There are three main components or parts of a slot machine: the cabinet, the reels and the payout tray. The cabinet houses all the mechanical parts of the slot machine. The reels contain the symbols that are displayed. These symbols can be just about anything. The first ones had fruit on them. The payout tray is where the player collects their winnings. This has now been replaced by a printer in most land-based casinos.

The Cash Ladder is an example of a special feature that looks more complex than it is. After each spin on the Hulk slot machine, a number will be displayed to the right of the reels. If you hit a winning combination on the Incredible Hulk slot machine, you can gamble your winnings by guessing whether the next number will be higher or lower than the number displayed. Using this feature, you can reach the top rung of $2000.

You may have heard slot machines called one-armed bandits because of the look of the lever on the side of the machine. This may also be in reference to the fact that more often than not players will lose their money to the machine.

There are different types of slot machines, like the multiplier and the buy-a-pay. It is vital that you are aware of each one of these slots so that you will be able to determine which slot is best for you.

This warranty covers all parts of the slot machine except the light bulbs. Whenever a person buys one of these slot machines, he or she is also given a user’s manual to which the user may refer if he or she faces any problem while using the slot machine.

…

How To Win In Online Slot Machines – On-Line Slot Machines

Slot machines are also the most numerous machines in any Vegas casino. A typical casino usually has at least a dozen slot machines or even a slot machine lounge. Even convenience stores sometimes have their own slot machines for quick bets. Though people don’t usually come to a casino just to play the slots, they use the machines while waiting for a vacant spot at the poker table or until their favorite casino game starts a new round. Statistics show that a night of casino gambling does not end without a visit to the slot machines for most casino patrons.

Before anything else, you should bring a hefty amount of cash with you. This is very risky, especially if displayed in a public place, so protective measures should be exercised.

Slot land – This online casino slot is known for its attractive ambience, excellent odds and completely secure financial transactions. And, unlike other sites, it does not require you to download any software. You can play with an initial deposit of up to $100. It has multiple line slots such as two pay-lines, four pay-lines, five pay-lines and eight pay-lines. You have pretty good chances of winning money here.

Invite your friends when you play. It is more fun. Besides, they will be the ones to remind you not to spend all your money. And when you enter the casino, think positively. Mental playing and winning attracts positive energy. Have fun, because you are there to play and enjoy. Do not think merely of winning or your luck will elude you.

One of the great things about playing over the internet is its simplicity in terms of mechanics. You don’t have to insert coins, push buttons, and pull handles. To spin the reels and win the prize, it only takes a click of the mouse button. If you want to increase or lower your bets or cash out the prize, all you need to do is click the mouse.

It is very easy to start playing and start winning. All you do is search for an online casino that you would like to join that has a ton of slot games that you like. After you find one, it will be a two-step process before you can start playing and winning.

If you play Rainbow Riches, you cannot help but notice the crystal clear graphics and the cool sound effects. Jingling coins, leprechauns, rainbows and pots of gold are all well rendered. Slot machines have really advanced since the days of the hand-pulled, lever-operated mechanical machines. The theme is Irish with Leprechauns and pots of gold and does not look incongruous on a casino slot machine. You can play Rainbow Riches on several spin-offs of the machine as well, such as Win Big Shindig for example. And you know what? Rainbow Riches has an online version too! It feels and looks exactly like the real thing and there is absolutely no difference. Why should there be any difference? Both online and offline are computer controlled machines that use the same software.

…

The Way You Select Your Internet Casino

Silver Dollar Casino offers a very good range of games, including casino games like roulette, slots, video poker, and blackjack. You can play these games in their download version and by instant play.

This is truly an issue, especially if you have other financial priorities. With online gaming, you don’t have to spend for air fare or gas just to travel to cities like Las Vegas and play in the casinos. You can save a lot of money because you don’t have to spend for plane tickets, hotel accommodations, food and drinks, as well as giving tips to the waiters and dealers. Imagine the cost of all of these if you would go all the way to a casino just to play.

Slots are set up to encourage players to play more coins. It is clear to see that the more coins one bets, the better the odds and the payouts are. Most machines allow you to select the value of the coin that you will play with. When the payout schedule pays at a higher rate for more coins, you are better off playing smaller denominations and maximum coins. This concept seems simple, but many jackpots have been lost by careless play.

There are a number of different manufacturers. The most popular ones are Scalextric, Carrera, AFX, Life Like, Revell and SCX. Sets for these makes are available from hobby stores, large department stores and online shopping sites including Amazon and eBay. Scalextric, Carrera and SCX have the widest range of cars, including analog and digital versions.

If you were only going to play with one coin, or you wanted the same payout percentage no matter how many coins you played, then you would want to play a multiplier slot machine. Multiplier machines pay out a certain amount of coins for certain symbols. This amount is then multiplied by the number of coins bet. So, if three cherries pay 10 coins for a one coin bet, they will pay 50 coins for a 5 coin bet. This type of machine does not penalize the player for not playing the maximum number of coins allowed. There are no big jackpots in this type of machine. If you are looking to get the most playing time out of your money then this is the machine for you.
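The multiplier rule above can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the cherries example; the function name and the idea of a per-symbol base payout are assumptions, not any real machine's pay table.

```python
def multiplier_payout(base_payout: int, coins_bet: int) -> int:
    """Payout on a multiplier machine: the one-coin payout times the coins bet.

    base_payout is the hypothetical payout (in coins) for a one coin bet.
    """
    return base_payout * coins_bet


three_cherries = 10  # pays 10 coins on a one coin bet, as in the example above

for coins in (1, 2, 5):
    total = multiplier_payout(three_cherries, coins)
    # The payout per coin bet stays the same, which is why this type of
    # machine does not penalize betting fewer than the maximum coins.
    print(f"{coins} coin(s) bet -> {total} coins paid ({total // coins} per coin)")
```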

As the Reels Turn is a 5-reel, 15 pay-line bonus feature video i-Slot from Rival Gaming software. It comes with scatters, a Tommy Wong bonus round, 10 free spins, 32 winning combinations, and a top jackpot of 1,000 coins. Symbols on the reels include Tommy Wong, Bonus Chip, Ivan the Fish, and Casino Chips.

Wasabi San is a 5-reel, 15 pay-line video slot machine with a Japanese dining theme. Wasabi San is an exquisitely delicious world of “Sue Shi,” California hand rolls, sake, tuna makis, and salmon roes. Two or more Sushi Chef symbols on the pay-line create winning combinations. Two symbols pay out $5, three symbols pay out $200, four symbols pay out $2,000, and all five Sushi Chef symbols pay out $7,500.

…

Texas Holdem Strategies For Home Games

This article summarizes 10 popular online slot machines, including As the Reels Turn, Cleopatra’s Gold, Enchanted Garden, Ladies Nite, Pay Magnetic!, Princess Jewels, Red White and Win, The Reel Deal, Tomb Raider, and Thunderstruck.

In a nutshell, the R4 / R4i is simply a card which enables you to run multimedia files or game files on your DS. No editing of the system files is required; it is strictly a ‘soft mod’ that does not affect your NDS in any way. You just insert the R4i / R4 card into the game slot, and the R4 / R4i software will run.

Break da Bank Again: Another revised slot machine with a revamped theme. Time to really crack the safe on the popular slots game Break da Bank. The 5x multipliers combined with the 15 free spin feature have the capacity to pay out a bundle of slot coins. 3 or more safe scatters trigger the free spins.

Second, you have to choose the right casino. Not all casinos are for everyone, so you should determine which one is for you. Moreover, every casino has a predetermined payout rate and you should figure out which payout is the most promising. Basically, if you want to cash in big amounts of money, you should choose the casino that offers the best payout rate.

First, set yourself to play. Be sure to have cash. They do not accept vouchers for playing slots. Then, set an amount to spend for that day on that game. Once you have consumed this amount, stop playing and come back again next time. Do not use all your money in just one sitting. Next, set your time alarm. Once it rings, stop playing and go out from the casino. Also, tell yourself to abandon the machine once you win the slot tournament. Do not be so greedy thinking that you want more victories. However, if you still have money in your bankroll, then you may still try other slot games. Yes, do not think that the machine where you had won is lucky enough to make you win over and over again. No, it will just use up all your money and you will lose more.

Mu Mu World Skill Stop Slot Machine can give you a great gambling experience without the hustle and bustle of the casino. You can even let your children play on this Antique Slot Machine without the fear of turning them into gamblers. With this machine you will also not be scared of your children falling into bad company that may be encountered in a casino environment.

Once you click the button for the bonus, a bonus wheel is going to pop up on your screen. You will notice that it says Loot and RP. The RP stands for Reward Points. This means that when you spin the wheel, you may land on special bonus loot or you may get some reward points as a special bonus.

Second, you will need to select a way to fund your account and withdraw your winnings. Each online casino offers multiple ways to accomplish this, so read over everything very carefully, and choose the option you think is best for your situation. The great thing about this step in the process is that the payment option you select will almost assuredly work for every other online casino you choose to join.

…

Star Poker – How You Can Win Now!

The size of jackpots on Bar X ranges depending on your stake level, but like the original version, the Bar X jackpot is triggered relatively often compared to many other online slot machines.

Sure, you may want to stop for rounds or days, but the key to winning is continuing to play in the long run. This does not mean you should forfeit everything else and focus on playing; you certainly shouldn’t. Maintaining a balanced lifestyle alongside online roulette betting will help you achieve satisfaction and happiness, not to mention a well-off bank account.

Fruit machines are one of the most sought after forms of entertainment in bars, casinos and night clubs. Online gaming possibilities have made them the most popular game online too. Fruit machines come in different types, from penny wagers to wagers of over 100 credits. Another attraction is the free fruit machine provided by certain online casinos. You can play on these machines without the fear of losing money.

The best strategy for meeting this double-your-money challenge is to look for a single pay line, two-coin machine with a modest jackpot and a pay table featuring a good range of medium sized prizes.

The second period of development of the slot machines was rather calm and fell in the middle of the twentieth century. The brightest event of the period was the creation of the Big Bertha. However, it was shortly overtopped by an even more striking innovation of that time – Super Big Bertha.

Slot Car Racing – the game takes you to the world of Formula 1 and thousand-horsepower cars in your internet browser as you race against other Formula 1 cars on a track that resembles the famous Silverstone! Do you have what it takes to master an F1 car? Find out by playing!

As a rule, straight, regular two-coin, three-reel machines are the best choice. The jackpot will be relatively modest, but so is the risk. Four- or five-reel slots, featuring single, double and triple bars, sevens, or other emblems, usually offer a much bigger jackpot, but it’s harder to get. Progressive slots dangle enormous jackpots. Remember that the odds on such machines are even worse. But then, huge jackpots are hit all the time. You never know when it might be your lucky day.

Tip #3 - Bet the maximum money to win the biggest wins. This I couldn’t stress enough in general mechanical slot play. Why bet one coin when you could bet three or more and win much more? Since we are dealing with mechanical slots and not multi-line video slots, we can all afford to bet only three coins. Players will find that the wins will come more frequently and the overall line wins will be much bigger. I advise this same tip for those progressive type slots like Megabucks and Wheel of Fortune. Ever bet one coin on the wheel and end up getting the bonus wheel symbol on the third wheel, only to grind your teeth when this happens? It has happened to the best of us, but it doesn’t ever need to happen again.

…

Play Free Mega Joker Online

It is very important that you have self control and the discipline to stick to your limit so that you won’t lose more money. Always remember that playing slots is gambling, and in gambling losing is inevitable. Play only with an amount which you are willing to lose, so that after losing you can convince yourself that you have paid a good deal of money for the best entertainment you ever had. Most of the players who don’t set this limit usually end up with a lot of regrets because their livelihood is ruined by a drastic loss in a slot machine game.

#5: Your life can change in a split second. See #4. The only way your life can change at a game like Roulette is if you take everything you own and bet it on one spin of the roulette wheel. In slots you could be playing the way you normally play and then boom – suddenly you’ve just won $200k.

Fruit machines are known for having more than a few special features. Features such as nudges, holds and cash ladders are almost exclusive to fruit machines. The Hulk fruit machine has all of these and more. The Incredible Hulk slot machine also has two game boards where you activate a lot of special features and win cash prizes. As you can expect from the sheer number of features on the Hulk slot machine, this makes it a very busy slot game with a lot going on on the screen at all times. It may take some getting used to, but it only takes a few spins to get a greater understanding of the Hulk fruit machine.

First, set yourself to play. Be sure to have cash. They do not accept vouchers for playing slots. Then, set an amount to spend for the day on that game. Once you have consumed this amount, stop playing and come back again next time. Do not use all your money in just one sitting. Next, set your time alarm. Once it rings, stop playing and go out from the casino. Also, tell yourself to abandon the machine once you win the slot tournament. Do not be so greedy thinking that you want more wins. However, if you still have money in your bankroll, then you may still try other slot games. Yes, do not think that the machine where you had won is lucky enough to make you win again and again. No, it will just use up all your money and you will lose more.

To play the game for fun is one thing and to play to win is another. It requires a lot of planning and strategy. It is based on basic poker rules, but the big difference is that here it is man versus machine.

Quiz shows naturally work well with online slots, and the bonus games are a big part of the video slot experience. Two examples of UK game shows which are now video slots are Blankety Blank and Sale of the Century. Sale of the Century features the authentic music from the 70’s quiz and does really well in reflecting the slightly cheesy feel of the game. Blankety Blank also has bonus rounds similar to the TV show.

$5 Million Touchdown is a 5-reel, 20 pay-line video slot from Vegas Tech about American football. It accepts coins from 1 cent to $10.00, and the maximum number of coins that you can bet per spin is 20 ($200). There are 40 winning combinations, a top jackpot of 500,000 coins, wilds (Referee), scatters (Scatter), 15 free spins, and a bonus game. To win the 15 free spins, you need to hit three or more Scatter symbols. To activate the bonus round, you need to hit two Bonus symbols on the reels. Symbols include Referee, Scatter, Cheerleader, and Football players.

…

50 Lions Slots – Available Online Now

First, you have to consider the fact that you can play these games anytime and anywhere you want. There is that comfort factor in there that entices people to go online and start playing. For as long as you have your computer, an internet connection, and your credit or debit card with you, you are set and ready to play. That means you can do this in the comfort of your own home, in your hotel room while on business trips, and even during lunch break at your place of work. You don’t have to be anxious about people disturbing you or getting into fights and dealing with the loud music. It is like having your own private VIP gaming room at your home or anywhere you are in the world.

When should you go for it? Let’s face it, small wins will not keep you happy for long. You’re there for the big win, but when should you go for it? Wait until the progressive jackpot becomes large. Why go for it when the jackpot is small?

If you only want to play with one coin, or you want the same payout percentage no matter how many coins you play, then you would want to play a multiplier slot machine. Multiplier machines pay out a certain amount of coins for certain symbols. This amount is then multiplied by the number of coins bet. So, if three cherries pay 10 coins for a one coin bet, they will pay 50 coins for a 5 coin bet. This type of machine doesn’t penalize the player for not playing the maximum number of coins allowed. There are no big jackpots in this type of machine. If you’re looking to get the most playing time out of your money then this is the machine for you.

Let’s say that you’re playing on a slot machine at stakes of $1.00 a spin. You should therefore enter the slot machine with $20.00 and aim to come out with anything over $25.00, as a quarter profit is achievable 70 percent of the time through numerical distribution.

The roulette table always draws a crowd in a real world casino. The action is almost hypnotizing. Watch the ball roll round and if it lands on your number, you win. The problem is that there are 37 or 38 slots for that ball to fall into and the odds for this game are clearly in the house’s favor. If you enjoy roulette, look for European Roulette, which has only 37 slots (no 00), and remember that the single number bets carry the worst odds. Consider betting groups, rows or lines of numbers and you will be able to spend more time at the table.
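To see why the 37-slot wheel is the better pick, here is a small Python sketch of the house edge on a single number bet. It assumes the standard 35-to-1 payout for a single number, which the text above does not state; the function name is made up for illustration.

```python
from fractions import Fraction


def single_number_house_edge(slots: int, payout_to_one: int = 35) -> Fraction:
    """House edge on a one-unit single number bet.

    slots: 37 for European Roulette (no 00), 38 for a wheel with 0 and 00.
    payout_to_one: assumed standard payout of 35 to 1 on a win.
    """
    p_win = Fraction(1, slots)
    # Expected result per unit bet: win 35 with probability 1/slots,
    # lose 1 with probability (slots - 1)/slots.
    expected_result = p_win * payout_to_one - (1 - p_win)
    return -expected_result


european = single_number_house_edge(37)
american = single_number_house_edge(38)
print(f"37 slots: house edge {float(european):.2%}")
print(f"38 slots: house edge {float(american):.2%}")
```

Under those assumptions the extra 00 slot roughly doubles what the house keeps from each unit bet.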

If you were lucky enough to win on a video slot machine, leave that machine. Do not think that machine is the ‘lucky machine’ for you. It made you win once, but there is no certainty it will let you win on the next games. Remember that slot machines are regulated by a random number generator, and this is electronically driven. Every second, it changes the combination of symbols many times. And most of the time, the combinations are not in your favor. If you still have the time or remaining balance in your allotted money, then maybe you can try the other slot machines. Look for the slot machine that offers high bonuses and high payouts but requires fewer coins.

Sure, you might want to stop for rounds or days, but the key to winning is continuing to play in the long run. This does not mean that you should forfeit everything else and focus on playing; you certainly shouldn’t. Maintaining a balanced lifestyle alongside online roulette betting will help you achieve satisfaction and happiness, not to mention a well-heeled bank account.

Be aware of how many symbols are on the slot machine. When you sit down, the first thing you should notice is how many symbols are on the machine. The number of symbols directly determines the number of possible combinations there are to win.
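As a rough sketch of how the symbol count drives the number of combinations, the Python below assumes every reel carries the same number of symbols and stops independently of the others. Real machines may weight their reels differently, so treat the figures as an illustration only; the function name is hypothetical.

```python
def possible_combinations(symbols_per_reel: int, reels: int = 3) -> int:
    """Number of distinct reel outcomes, assuming equal, independent reels."""
    return symbols_per_reel ** reels


for symbols in (10, 20):
    combos = possible_combinations(symbols)
    # With one jackpot combination, the chance per spin is 1 in `combos`.
    print(f"{symbols} symbols on 3 reels -> {combos} combinations")
```

Under these assumptions, doubling the symbols on a three-reel machine multiplies the combinations by eight, so more symbols means a given combination comes up far less often.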

…

The Online Casino Tip For Good Chance Of Winning

The Diamond Bonus Symbol pays the highest fixed Jackpot after the Lion symbol. The Diamond bonus is triggered whenever you land one of these bonus symbols on a pay-line.

These three games allow players to use strategies that can help sway the odds in their favor. But keep in mind, you have to learn how to play the games in order to get the best odds. If you don’t know what you’re doing, you’d probably be better off playing the slots games.

Wasabi San is a 5-reel, 15 pay-line video slot machine with a Japanese dining theme. Wasabi San is an exquisitely delicious world of “Sue Shi,” California hand rolls, sake, tuna makis, and salmon roes. Two or more Sushi Chef symbols on the pay-line create winning combinations. Two symbols pay out $5, three symbols pay out $200, four symbols pay out $2,000, and all five Sushi Chef symbols pay out $7,500.

Slot machine gaming is a type of gambling, where money is always the basic unit. You can either make it grow, or watch it fade away from your hands. It would not bother you much if small amounts of money are involved. However, playing the slots wouldn’t work if you only have minimal bets.

The roulette table always draws an audience in a real world casino. The action is almost hypnotizing. Watch the ball roll round and if it lands on your number, you win. The problem is that there are 37 or 38 slots for that ball to fall into and the odds for this game are clearly in the house’s favor. If you like roulette, look for European Roulette, which has only 37 slots (no 00), and keep in mind that the single number bets carry the worst odds. Consider betting groups, rows or lines of numbers and you will be able to spend much longer at the table.

Something else to factor into your calculation is how much the perks and bonuses you’re getting back from the casino are worth. If you’re playing in a land-based casino where you’re getting free drinks while you play, then you can subtract the cost of those drinks from your hourly cost. (Or you could add the cost of those drinks to the value of the entertainment you’re receiving; it’s just a matter of perspective.) My recommendation would be to drink top-shelf liquor and premium beers in order to maximize the entertainment value you’re getting. A Heineken can cost $4 a bottle in a nice restaurant. Drink two Heinekens an hour, and you’ve just lowered what it costs you to play each hour from $75 to $67.

Blackjack. The whole idea of the game is to accumulate cards with point totals as close to 21 as possible. It should be done without going over 21, and then other cards are represented by their number.

Once you’ve turned on your Nintendo DS or Nintendo DS lite, the system files will load from the R4 DS cartridge, the same way they do when using the M3 DS Simply. It takes about 2 seconds for the main menu to appear, with the R4 DS logo on the top screen and the menu on the bottom. On the bottom screen you can select one of three options.

…

Winning Video Poker – The Simplest Way

Lucky Charmer has a second screen bonus feature that makes it fun to play. You will choose between 3 musical pipes, and the charmer plays your choice if you are able to reach the bonus round. The object that rises out of the baskets will be the one to determine your winnings. To be able to activate the bonus round you must hit the King Cobra on the third pay-line.

Tip #2. Know the payout schedule before sitting down at a slot machine. Just like in poker, knowledge of the odds and payouts is crucial to developing a good strategy.

There are certain things that you need to know before actually starting the game. It is better to read more about the game so that you play it correctly. There is a common misconception among players: they think that past performance can have some impact on the game. Some also think that future events can be predicted from past results. That is not true. It is a game of sheer chance, and luck is the deciding factor. The best part of this game is that it is easy to learn and understand. But you need to practice it again and again. You can play free roulette online.

BOOT SLOT 2 – This menu option allows the R4 DS, just like the M3 DS, to boot the GBA Slot, or Slot 2, in your Nintendo DS / DS Lite console. This is for those of us who want to get our hands on a GBA Flash card and want to run GBA Homebrew games and applications as well as Nintendo DS ones. It also adds extra storage for NDS Homebrew, because you can actually use a GBA Flash card to boot NDS files, as long as you use the R4 DS as a PASSME / PASSCARD solution.

Another online gambling myth comes in the form of reverse psychology. You’ve lost five straight hands of Texas Hold ‘Em, so the cards are eventually bound to fall in your favor. Betting according to this theory could prove detrimental. Streaks of bad luck don’t necessarily lead to a path of good fortune. Regardless of what you’ve heard, there is no way to turn on the juice and completely control the game. Online casino games aren’t programmed to allow flawless games after a succession of poor ones. It’s important to remember that each previous hand has no effect on the next one; just because your last slot pull earned a hefty bonus doesn’t mean it will continue to happen.
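A short simulation can illustrate the point about streaks. The sketch below treats every hand as an independent win-or-lose event with a fixed, hypothetical win probability of 45% (a simplification chosen for illustration, not a figure from any real game) and measures how often the hand right after five straight losses is won.

```python
import random

def win_rate_after_streak(p_win: float = 0.45, streak: int = 5,
                          trials: int = 200_000, seed: int = 1) -> float:
    """Share of hands won immediately after `streak` or more straight losses.

    Every hand is an independent draw with probability `p_win`
    (a hypothetical value used only for this illustration).
    """
    rng = random.Random(seed)
    losses_in_a_row = 0
    wins_after_streak = 0
    hands_after_streak = 0
    for _ in range(trials):
        won = rng.random() < p_win
        if losses_in_a_row >= streak:
            hands_after_streak += 1
            wins_after_streak += won
        # Reset the counter on a win, extend it on a loss.
        losses_in_a_row = 0 if won else losses_in_a_row + 1
    return wins_after_streak / hands_after_streak

print(round(win_rate_after_streak(), 3))
```

Because the draws are independent, the rate printed should sit close to the underlying 45% rather than climbing after a losing streak.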

Craps is one of the more complicated games to learn. It offers a wide variety of bets and has an etiquette all its own. Some novice gamblers will be intimidated by all the action at a craps table. Many don’t know the difference between a pass line and a don’t pass bet. They may not know that some bets might offend other players at the table, because superstition plays a large part in craps. Some players holding the dice think a don’t pass bet is a jinx, because it is a bet made directly against their own bet.

If you’re playing a progressive slot and your bankroll is too short to play max coins, move down a coin size. Instead of playing the dollar progressive games, play the quarter progressive games. As long as you can play max coins, you can land the jackpot on that game.

…

The Best Jogos Online

Determine how much money and time you can afford to lose in that session. Before you enter the casino, set a budget for your play. Set a time limit too. Playing slots is so addictive that you might not notice you have already spent all your money and time inside the casino.

Slot machines – Look for the highest number of slot machines in various denominations, from 1 cent to $100. The payouts on these slot machines are among the highest compared to other casinos in the east. It has a non-smoking area too, where the whole family can enjoy the machines.

The Lord of the Rings Slot Machine is a Pachislo slot machine, which means that you are able to control when the reels stop spinning during your turn. This allows you to infuse the usual slot machine experience with a bit more skill! The slot machine also includes a mini game that you can play between spins.

It is a good idea for you to join the slots club at any casino that you go to. This is one way that you can reduce the amount of money that you lose, because you will be able to get things around the casino for free.

If you come out anywhere from break-even to 49% profit, then you can play again on the same machine. Your chances of getting the jackpot are high, as it may be a “hot slot”. For example, if you started spinning with $100 and you now have about $100-$149, it is an indication that the slot you are playing is one that offers the best payout.

Although there are no exact strategies that will surely nail you the win in playing slots, here are some tips and strategies that will guide you in increasing your chances of winning. If you use these tips every time you play, you will be able to gain more profits in the long run.

Fact: No. There are more losing combos than winning ones. Also, the top winning combination occurs rarely. The smaller the payout, the more often those winning combos appear. And the larger the payout, the less often that combination is going to appear.

…

A Losing Battle – My Casino Consequence

Generally, you need to calculate the cost per spin so that you can play slot machines in accordance with your budget. It is always fun to play a slot on which you can have at least 10 spins. Learning how to evaluate a machine is one way to maximize your profits.
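The cost-per-spin check can be sketched in a few lines of Python. The formula used here (coin value times coins per line times number of lines) is an assumption about how a typical machine charges, and the example figures are hypothetical; amounts are kept in cents to avoid rounding problems.

```python
def cost_per_spin(coin_value_cents: int, coins_per_line: int, lines: int) -> int:
    """Cost of one spin in cents (assumed formula: coin value x coins x lines)."""
    return coin_value_cents * coins_per_line * lines

def spins_affordable(budget_cents: int, spin_cost_cents: int) -> int:
    """Whole number of spins the budget covers."""
    return budget_cents // spin_cost_cents

# Hypothetical example: quarter machine, 3 coins, 1 line, $20 budget.
spin_cost = cost_per_spin(25, 3, 1)
spins = spins_affordable(2000, spin_cost)
print(spin_cost, spins, spins >= 10)
```

In this example a spin costs 75 cents and the $20 budget covers 26 spins, comfortably above the 10-spin minimum suggested above.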

In a nutshell, the R4 / R4i is just a card that enables you to run multimedia files or game files on your DS. No editing of the system files is required; it is strictly a ‘soft mod’ that does not affect your NDS in any way. You just insert the R4i / R4 card into the game slot, and the R4 / R4i software will run.

Playing free slots is a great way to get familiar with the game. Beginners are exposed to virtual slot machines where they can place virtual money to put the machine into play mode. The aim is basically to hit the winning combination or combinations. Free slots are primarily created for practice or demo games. Today, online slots are a far cry from their early ancestors, the mechanical slot machines. Whereas the mechanism of the slot machine determined the outcome of the game in the past, online slots today are run by a program called the random number generator. Free slots run on these programs too.
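As a rough illustration of a spin driven by a random number generator, here is a minimal Python sketch. The symbol list, the three-reel layout and the "all symbols match" win rule are all hypothetical, and real machines use weighted reels and purpose-built generators rather than Python's `random` module.

```python
import random

SYMBOLS = ["cherry", "lemon", "bar", "seven"]  # hypothetical reel symbols

def spin(rng: random.Random, reels: int = 3) -> tuple:
    """Pick one symbol per reel, each chosen independently by the generator."""
    return tuple(rng.choice(SYMBOLS) for _ in range(reels))

def is_win(result: tuple) -> bool:
    """Hypothetical win rule: every reel shows the same symbol."""
    return len(set(result)) == 1

rng = random.Random()  # unseeded, so every run differs
result = spin(rng)
print(result, "win" if is_win(result) else "no win")
```

The point of the sketch is only that the outcome comes from the generator at the moment of the spin, not from any mechanical state of the reels.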

It is a good idea for you to join the slots club at any casino that you go to. This is one way that you can reduce the amount of money that you lose, since you will be able to get things around the casino for free.

3Dice has been on the receiving end of plenty of awards in its years in the industry; Best Customer Service Team and USA Friendly Casino of the Year are just a few of the prestigious awards in its trophy cabinet. Owned and operated by Gold Consulting S.A., the Danmar Investment Group, this casino is fully licensed and regulated by the Curacao Gaming Authority.

Playing a slot machine is simple. First, you put your money in the machine. Today’s machines will take all denominations of bills. You can put in as much money as you want. This money will be converted into credits that can be used in the machine.

The obvious minus is the absence of background music. All you can hear while playing this online slot is the scratching (as I’d call it) of the moving reels and the bingo-sound when you win.

…

Rainbow Riches Slot Machine Review

Online gambling sites offer free gambling and practice games, including slots for fun. While you may not earn bonuses or win anything extra when you play free online slots or play just for fun, you are able to get better at the games. Sometimes, you will find that online slot providers will offer you chances to win even more by joining special clubs.

The value of jackpots on Bar X depends on your stake level, but like the original version, the Bar X jackpot is triggered relatively often in comparison to many other online slots.

Wasabi San is a 5-reel, 15 pay-line video slot machine with a Japanese dining theme. Wasabi San is an exquisitely delicious world of “Sue Shi,” California hand rolls, sake, tuna makis, and salmon roes. Two or more Sushi Chef symbols on the pay-line create winning combinations. Two symbols pay out $5, three symbols pay out $200, four symbols pay out $2,000, and all five Sushi Chef symbols pay out $7,500.
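The Sushi Chef payouts listed above can be expressed as a simple lookup. This is only a sketch of the quoted figures; the names are illustrative, and it assumes that fewer than two symbols pays nothing.

```python
# Payouts in dollars for Sushi Chef symbols on a pay-line, as quoted in the text.
SUSHI_CHEF_PAYOUTS = {2: 5, 3: 200, 4: 2000, 5: 7500}

def sushi_chef_payout(symbol_count: int) -> int:
    """Payout in dollars for the given number of Sushi Chef symbols.

    Counts outside the table (assumed to be non-winning) pay 0.
    """
    return SUSHI_CHEF_PAYOUTS.get(symbol_count, 0)

for count in range(1, 6):
    print(count, sushi_chef_payout(count))
```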

Online slot games are a fun choice for those who don’t have a lot of cash. They are a relatively secure choice. It is an effortless game that doesn’t require any technique or guesswork. There are no “slot faces” like there are poker faces.

Fruit machines are one of the most sought-after forms of entertainment in bars, casinos and pubs. Online gaming possibilities have made them the most popular game online too. Fruit machines come in different types, from penny wagers to wagers of more than 100 credits. Another attraction is the free fruit machine offered by certain online casinos. You can play on these machines without fear of losing money.

If you are playing a progressive slot and your bankroll is too short to play max coins, move down a coin size. Instead of playing the dollar progressive games, play the quarter progressive games. As long as you can play max coins, you can land the jackpot on that game.

In the early 90’s, way before online casinos were prevalent, I enjoyed a great game of Roulette at one of my favorite land casinos three or four times a week. These days, I don’t even have to leave the comforts of my own home to get in on the real action.

Familiarize yourself with the rules of the particular slot tournament that you are playing in. Although the actual play will be similar, the payout and re-buy systems may be different. Some online slot tournaments will allow you to re-buy credits after you have used your initial credits. This is important to know if you are on the leader board and expect to be paid out. Each tournament also decides how it will determine the winner. In some slot tournaments, the player with the most credits at the end of the established time period wins. Other tournaments have a playoff with a predetermined number of finalists.

…

Play Online Slots – Thrill Guaranteed

The Diamond Bonus Symbol pays the highest fixed Jackpot after the Lion symbol. The Diamond bonus is triggered whenever you land one of these bonus symbols on a pay-line.

If you want to play, it is best to plan ahead and know for sure how long you will be playing so that you can give yourself a budget. You should not be willing to waste a lot of money on this. It is a good form of recreation and may also earn you some cash. However, losing a lot of money is definitely not advisable.

The other thing they may give you is the chance to play for free for one hour. They will give you a specific amount of bonus credits to use. If you lose them within the hour, the trial is over. If you end up winning within the hour, then you may be able to keep your winnings, but with some very specific restrictions. You will need to read the rules and regulations very carefully regarding this. Each casino has its own set of rules in general.

Slots are set up to encourage players to play more coins. It is clear to see that the more coins one bets, the better the odds and the payouts are. Most machines allow you to select the value of the coin that you will play with. When the payout schedule pays at a higher rate for more coins, you are better off playing smaller denominations and maximum coins. This concept seems simple, but many jackpots have been lost through careless play.

The second period of development of the slot machines was rather calm and fell in the middle of the twentieth century. The brightest event of the period was the production of the Big Bertha. However, it was shortly overtopped by an even more striking innovation of that time: Super Big Bertha.

Tip #3. Bet the maximum money to win the biggest wins. I can’t stress this enough as a staple of general mechanical slot play. Why bet one coin when you could bet three or more and win much more? Since we are dealing with mechanical slots and not multi-line video slots, we can all afford to bet only three coins. Players will find that the wins will come more frequently and the overall line wins will be much bigger. I advise this same tip for progressive slots like Megabucks and Wheel of Fortune. Ever bet one coin on the wheel and end up getting the bonus wheel symbol on the third reel, only to grind your teeth when it happens? It has happened to the best of us, but it doesn’t ever need to happen again.

Let’s say that you’re playing a slot machine at stakes of $1.00 a spin. You should therefore sit down at the slot machine with $20.00 and aim to come out with anything over $25.00, as a quarter profit can be achieved 70 percent of the time through numerical dispensation.

…

Ways To Win When You Play Rainbow Riches

This happens, and you need to know when to stop to prevent losing further and when to continue to get back what you have lost. Tracking the game is another wise move, as it can determine your next action. Keeping your cool even when losing helps you think more clearly, thus allowing you to generate more earnings.

If you wish to try out gambling without risking too much, why not try going to some of the older casinos that offer some free games on their slot machines just so you can try out playing in their establishments. They may ask you to fill out some information sheets, but that’s it. You get to play on their slot machines for free!

There are certain things you need to know before actually starting the game. It is better to read more about the game so that you can play it correctly. There is a common misconception among players: they think that past performance will have some effect on the game. Some also think that future events can be predicted from past results. That is not true. It is a game of sheer chance, and luck is the deciding factor. The best part of it is that it is easy to learn and understand. But you need to practice it again and again. You can play free roulette online.